Enterprise Generative AI: Build vs. Buy

Generative AI (GenAI) is poised to catalyze innovation and revolutionize customer experience across all business sectors. The question is not whether you should implement a GenAI solution in your organization, but how best to implement one, and quickly.

Executive Summary

Nearly every company can gain value from Generative AI solutions. But should you build your own or buy one off the shelf?

-

Enterprises are often impressed by proof-of-concept demonstrations, but drastically misjudge the time and cost to turn these into full enterprise-ready solutions.

-

An enterprise GenAI solution is not just an LLM, but consists of many layers. Each one can bring unexpected challenges.

-

Leveraging the expertise of a GenAI platform can drastically reduce costs and accelerate time-to-market.

-

Buying solutions is often associated with vendor lock-in, but with a field moving as fast as generative AI, buying can actually give you more flexibility than building your own and being stuck with a single LLM.

Introduction

The time for GenAI is now.

Incorporating GenAI into your business will drive operational excellence, open new avenues for growth and innovation, and enable customized services at scale. That makes GenAI a strategic imperative for forward-thinking organizations that aim to stay competitive in a rapidly evolving digital landscape.

If you have a strong technical team with a solid track record of building digital solutions for your business, you might be tempted to build your GenAI solution from scratch. But developing GenAI in-house presents many challenges, including substantial investments in expertise, infrastructure, and ongoing maintenance. The complexities of developing and training generative AI models demand a highly specialized skill set that can be difficult and costly to acquire, and staying abreast of rapidly evolving AI technologies while ensuring that your solution is used securely and ethically requires continuous effort and resources.

This is not the first time that enterprises have rushed to build AI solutions for customer support. Around 2015, many enterprises built their own virtual assistants in-house, and nearly all of those failed. Even companies like Meta and Microsoft faced spectacular failures with M (Facebook's virtual assistant, launched in 2015 and discontinued in 2018) and Tay (a chatbot Microsoft launched in 2016 and shut down within a day after it began posting offensive content).

Many banks and other companies also attempted to quickly build their own AI solutions in-house and spent millions of dollars and many years of ultimately wasted development effort. While companies do not like publicly discussing their failed projects, it’s likely that of every 100 attempted AI solutions, only three or four ended up in production, and a majority of these were later discontinued.

In the end, it’s common for a company to spend $2-3 million and two or more years trying and failing to develop a solution in-house that a vendor could have delivered at a tenth of the cost, in a time frame measured in months.

Fortunately, the current surge in GenAI means you have unprecedented access to scalable, customizable, and efficient solutions to integrate into your operations, whether you choose to buy an off-the-shelf solution or design a hybrid approach that combines externally sourced tools and platforms with custom-developed in-house solutions.

Let's break down the different layers that make up enterprise GenAI solutions to help you decide whether you want to build or buy your GenAI solution, or something in between.

The often underestimated gap between a proof-of-concept and an enterprise-grade solution

With the explosion in AI tools, it's very easy to build a quick demonstration of a generative AI solution. Because you can take some PDFs and 'chat' with them using LangChain or any of a plethora of other tools, it's common for teams to get an initial 'wow' factor and then completely misjudge the gap between that demo and a production solution.

This leads to internal teams committing to building a solution in unrealistic time frames, and companies releasing solutions that are completely unfit for purpose, resulting in many embarrassing and costly mistakes, such as the now infamous case of DPD releasing an AI agent that would happily disparage its owner if asked.

Enterprise features such as an input guard (filtering the user's messages) and an output guard (filtering the AI's messages) would have prevented this.
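To make the idea concrete, here is a minimal sketch of the two guards wrapped around a model call. It assumes a simple deny-list approach; the patterns, the fallback message, and the `call_llm` stand-in are hypothetical, and production guards typically rely on trained classifiers or a dedicated moderation model rather than regular expressions alone.

```python
import re
from typing import Callable

# Hypothetical deny-lists; real guards usually combine classifiers with rules.
INPUT_BLOCKLIST = [
    re.compile(r"ignore (all|your) (previous|prior) instructions", re.IGNORECASE),
    re.compile(r"\b(swear|curse)\b", re.IGNORECASE),
]
OUTPUT_BLOCKLIST = [
    re.compile(r"\blegally binding\b", re.IGNORECASE),
    re.compile(r"\b(worst|useless) (company|delivery firm)\b", re.IGNORECASE),
]
FALLBACK = "Sorry, I can't help with that. Let me connect you with a colleague."


def violates(text: str, patterns: list[re.Pattern]) -> bool:
    """Return True if any blocked pattern appears in the text."""
    return any(p.search(text) for p in patterns)


def guarded_reply(user_message: str, call_llm: Callable[[str], str]) -> str:
    # Input guard: filter the user's message before it reaches the model.
    if violates(user_message, INPUT_BLOCKLIST):
        return FALLBACK
    reply = call_llm(user_message)
    # Output guard: filter the model's reply before it reaches the user.
    if violates(reply, OUTPUT_BLOCKLIST):
        return FALLBACK
    return reply
```

With a model that replies "Deal! That's a legally binding offer.", the output guard replaces the reply with the fallback message before the customer ever sees it.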

In another example, a GM dealer was forced to deactivate their chatbot after it offered to sell a car for $1 in what the bot claimed to be a legally binding offer.

The fact is, there is a mountain of complexity between the simplest demonstration of a cute AI bot and an enterprise solution. This is reflected in the explosion of AI startups that have raised millions of dollars to solve very specific parts of the challenge of building your own GenAI solution in-house.

The LLM has the 'wow' factor for GenAI solutions, but it's only a small piece of the overall complexity

Why building AI is different from building software

Although you'll need a strong in-house software competency to even consider building your own GenAI solution, many companies find out the hard way that building AI solutions demands different skills from traditional software engineering.

With software, the focus is on code. It's easy and cheap for developers to test code and integrate it into a complete solution. It's also fairly straightforward to host and run that code.

AI solutions have a large software component, but they also require managing models and datasets at a scale that most software engineering teams have not experienced. Developing and testing features is also much harder, as experiments might need to run for weeks on expensive servers before results can be seen.

Even engineers who have experience with machine learning are often not equipped with the skills to build and evaluate generative AI models. In classic machine learning, you can test your solution by comparing a predicted result against a gold-standard answer: given a prediction $\hat{y}$ and an expected value $y$, you verify that $\hat{y} = y$, or at least that $\hat{y} \approx y$, meaning that what you generated is the same as what you expected, or very close to it.

GenAI testing demands a more sophisticated framework. Your AI agent might generate a correct answer that means exactly the same thing as your example gold-standard answer but looks very different, as the agent could have used different words and a different sentence structure than the example answer while conveying the correct meaning.
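To see why exact matching breaks down, it helps to compare it with a softer metric. The sketch below contrasts exact match with token-overlap F1, the style of metric used in extractive question answering; the function names and example sentences are our own. Token overlap is still only a crude proxy for meaning, so teams typically add embedding similarity or an LLM-as-judge on top.

```python
import re
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase and split into word tokens, dropping punctuation."""
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_match(prediction: str, gold: str) -> bool:
    return normalize(prediction) == normalize(gold)


def token_f1(prediction: str, gold: str) -> float:
    """Harmonic mean of token precision and recall between two answers."""
    pred, ref = normalize(prediction), normalize(gold)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


gold = "You can cancel your order within 14 days."
answer = "Orders can be cancelled within 14 days of purchase."
print(exact_match(answer, gold))        # False, although the meaning is the same
print(round(token_f1(answer, gold), 2))  # 0.47
```

Even the softer metric gives only partial credit to an answer that a human reviewer would mark as fully correct.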

Datasets are also usually much bigger than in typical software projects: engineers may have to handle terabytes or even petabytes of data, and many of their usual tools cannot easily open or manipulate the underlying files.

Even once a model is trained, developers need to work out how to run inference efficiently; otherwise, it can take minutes for the AI solution to process each interaction.

Beyond initial development, ensuring that AI models keep improving over time requires a robust framework for monitoring accuracy metrics. Models must be tested rigorously across different conditions so that improvements are verified statistically rather than by guesswork, and real-world feedback and evolving data must flow back into the models so that performance is not only maintained but enhanced.
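As an illustration of verifying an improvement statistically rather than by eye, here is a two-proportion z-test comparing the accuracy of two model versions. The counts are made up, and the test assumes the two evaluation sets are independent samples; if both models answer the same questions, a paired test such as McNemar's test is the better fit.

```python
import math


def two_proportion_z_test(correct_a: int, n_a: int, correct_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: accuracy_a == accuracy_b."""
    p_a, p_b = correct_a / n_a, correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, computed via the error function.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical results: old model 780/1000 correct, new model 815/1000 correct.
z, p = two_proportion_z_test(780, 1000, 815, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers, the p-value lands just above 0.05, so a 3.5-point gain on 1,000 questions would not yet count as a clear improvement at the usual threshold.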

The AI development process requires a deeper understanding and application of statistics, a distinct shift from conventional software engineering practices, where outcomes are more predictable. Developers who are primarily used to managing and developing code might not have the experience required to also manage data, models, hardware, and inference.

Beyond deployment: Maintenance and evolution

When it comes to weighing up build versus buy options for Generative AI solutions, many of the same tradeoffs that apply to buying or building any system come into play, though they are often subtly different when it comes to GenAI.

For example, you need to decide whether you want to be responsible for maintaining your solution or to have someone else handle it. While enterprises often choose to take on a maintenance burden to keep development in-house, GenAI solutions bring additional considerations:

-

Talent scarcity: maintenance often needs to be done by highly experienced AI engineers. There’s a skills shortage for people who can do this, so it’s not always just about maintaining the extra headcount, but about finding experts who can solve your problems.

-

Speed of evolution: with other solutions, you may only need to do maintenance every year, or even every few years. The GenAI market is moving so fast that you need to keep integrating new models and other technologies whose lifecycles might be measured in weeks.

By buying into a mature platform, you gain not only the benefits of this maintenance being done for you, but also benefit from fixes done for other customers of that platform. If a specific customer has problems with hallucinated responses, the platform can roll out a fix for that to all customers, even the ones that have not yet experienced the problem.

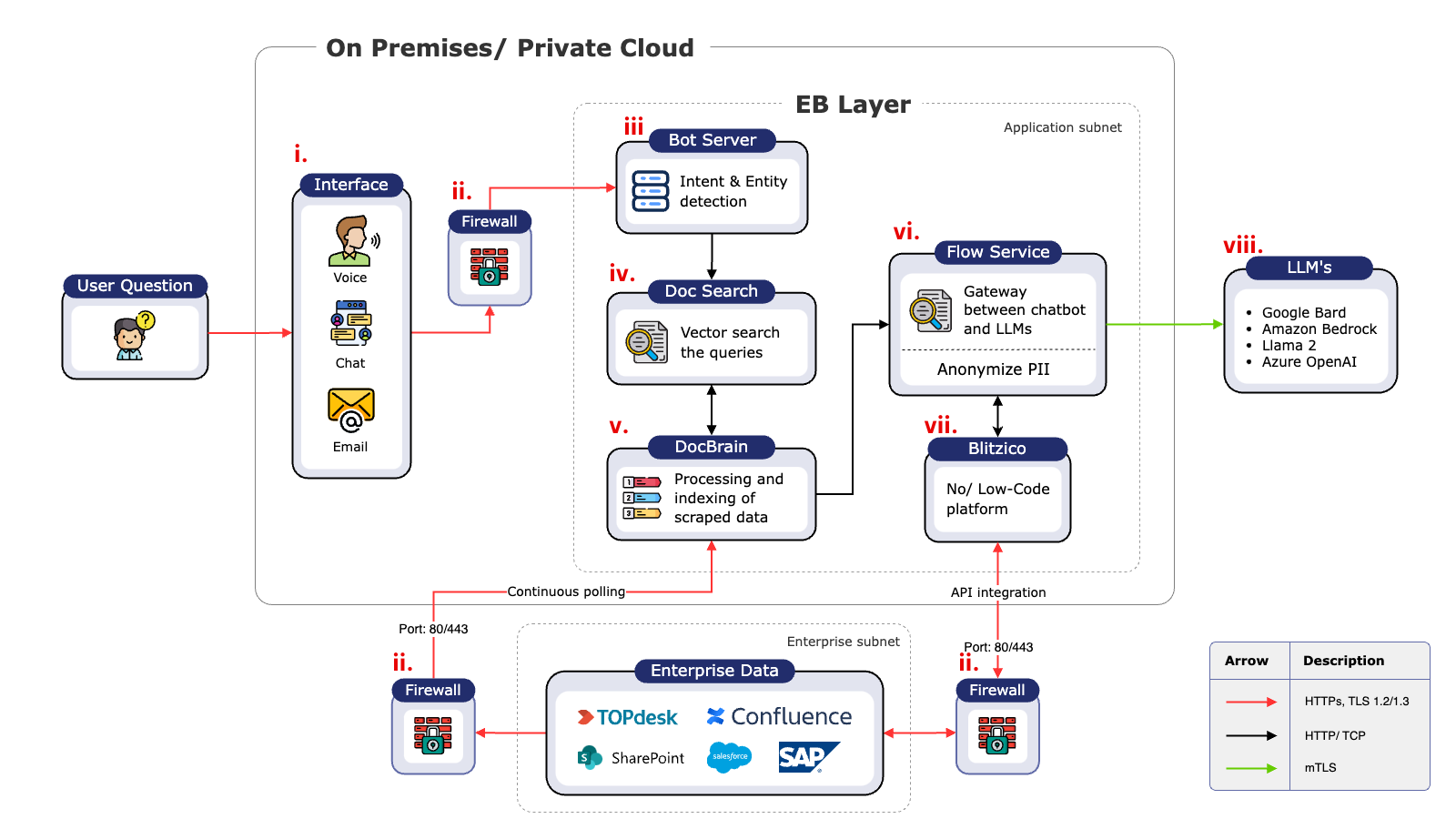

Understanding the layers of a GenAI solution

You might think of a GenAI solution as a cohesive whole, but in reality, these systems are built from distinct layers, starting with the large language model (LLM) at the base.

Layer 1: Foundational LLM model: The LLM at the core of your GenAI solution, for example, GPT, Gemini, Mistral, Llama 3, or Claude.

Layer 2: RAG platform and conversational flows: A retrieval-augmented generation (RAG) platform that augments the base model with your enterprise knowledge base and company data, for example, Azure RAG or Doc Brain.

Layer 3: Frontend: The interface your users will use to interact with the GenAI system, for example, Intercom, email, WhatsApp, a custom web widget, or an omnichannel solution that lets users choose how to interact with the AI.

Layer 4: Enterprise features: The advanced functionalities, capabilities, and support mechanisms that cater to your specific needs and address your business's scale, complexity, security, compliance, integration, and operational requirements, for example, user management, regulatory and governance compliance, access governance, PII anonymization, content filtering, and SLAs.

Layer 5: Integrations: At the pinnacle of the enterprise GenAI structure, integrations are the connections between the GenAI system and other business systems and applications that allow for automated workflows, enhanced data flow, and seamless communication with existing technologies and processes, for example, Genesys, SharePoint, Confluence, and Teams.

Each of these layers can be built internally or bought off the shelf. If you want to mix and match by buying each layer separately or building certain elements in-house, you'll still need to put in significant effort to combine the parts into a cohesive whole.

Layer 1: Foundational LLM model for enterprise GenAI

While building your own LLM from scratch is technically possible, it's not feasible to compete with OpenAI, Anthropic, Google, and a handful of other well-resourced players in this space. Developing a model takes years of research and development, and millions of dollars.

For this layer of your GenAI solution, you have a few options.

Buy a higher-level solution that includes LLM access. If you buy a complete solution off the shelf, it will likely come with a foundational model or an option for you to pick between several.

Contract with an LLM provider directly. You can purchase credits or enterprise-level agreements with providers such as OpenAI, Microsoft, or Google directly. You will then get access to an API where you can send messages and receive completions from the LLM.
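To show what "send messages and receive completions" involves, the sketch below builds a request for OpenAI's Chat Completions endpoint using only the standard library and extracts the reply from the response JSON. The model name and messages are placeholders; check your provider's API reference for current endpoints and models. In practice, most teams use the provider's official SDK rather than raw HTTP.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(model: str, messages: list[dict], api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a Chat Completions POST request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant's text out of a Chat Completions response."""
    return response_json["choices"][0]["message"]["content"]


request = build_chat_request(
    model="gpt-4o",  # placeholder: use a model your agreement covers
    messages=[
        {"role": "system", "content": "You are a helpful support assistant."},
        {"role": "user", "content": "How do I reset my password?"},
    ],
    api_key=os.environ.get("OPENAI_API_KEY", ""),
)
# To actually send it (requires a valid API key and network access):
# with urllib.request.urlopen(request) as resp:
#     print(extract_reply(json.load(resp)))
```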

Build on top of an open-source model. While it is possible to build on open-source models like Llama 3, these LLMs are a lot harder to use than people expect. Even after correctly deploying and fine-tuning open-source models and building the supporting infrastructure, the quality of open-source LLM generation has often lagged well behind that of proprietary models. For example, GPT-4 scores 67% on the popular HumanEval coding benchmark, compared to 29.9% for the largest Llama 2 model (70B).

Build your own model from scratch. Building your own model from scratch is seldom the best option, but if you choose this route, you will need:

-

Software: A programming language like Python and a deep learning framework like PyTorch or TensorFlow.

-

Hardware: Primarily GPUs for training and inference, purchased from NVIDIA or rented from a cloud provider like AWS.

-

Data: The Pile is an 800 GB text dataset that is a popular starting point for training LLMs.

-

Algorithms: The exact training methods of the best LLMs remain secret, but they build on well-known techniques such as the transformer deep-learning architecture.
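The core operation of the transformer is scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V: each query is compared with every key, and the resulting weights average the value vectors. The pure-Python sketch below computes it for tiny matrices purely to show the idea; real models run batched, multi-head versions of this on GPUs through frameworks like PyTorch.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: list[list[float]], K: list[list[float]], V: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product attention: softmax(Q @ K.T / sqrt(d_k)) @ V."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
print(attention(Q, K, V))  # the query matches the first key, so the output leans toward the first value
```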

Unexpected challenges you might experience while building your own layer include long training times (sometimes weeks or months), expensive hardware that is in short supply, and a talent shortage of engineers who know how to train or fine-tune LLMs.

Layer 2: RAG, intent building, and conversational flow platforms for enterprise GenAI

Foundational LLMs are trained on a vast array of information, but that information is not complete. Every model has a training cutoff (some GPT-4 versions, for example, know nothing about events after April 2023), and no model has access to your company-specific data and knowledge base unless that information was publicly available on the internet when the model was trained.

To add up-to-date or proprietary information to your GenAI solution, you'll need a retrieval-augmented generation (RAG) platform.
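At its core, RAG retrieves the passages most relevant to a question and places them in the prompt before the LLM is called. The sketch below uses word-count vectors and cosine similarity as a stand-in for a real embedding model and vector database; the documents and the prompt template are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy 'embedding': a bag of lowercase words. Real systems use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = vectorize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Augment the question with retrieved context for the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Premium customers get free express shipping.",
]
print(build_prompt("How long do refunds take?", docs))
```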

Once your AI is augmented with up-to-date or company-specific information, you'll also need to design specific conversational flows using intents and other conversational actions.

Your options for the RAG layer of your enterprise GenAI platform are to build your own, buy a RAG-specific solution, or integrate a higher-level solution that includes RAG functionality.

Regardless of the route you choose for the RAG layer of your enterprise GenAI system, one of the challenges you'll face is automating updates to the model's knowledge base. When information in your enterprise repositories changes (on your website, or in SharePoint or Confluence), those updates must be reflected automatically in your RAG solution to maintain accuracy. Off-the-shelf RAG platforms don't typically provide this automation, and creating a system that seamlessly captures new content without manual intervention adds complexity to development.
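One common way to automate these updates is to fingerprint each source document and re-index only what has changed. In the sketch below, the source is a plain dictionary of document IDs to text, and the two returned lists would drive hypothetical upsert and delete calls against your vector database; a scheduler could run the comparison periodically.

```python
import hashlib


def fingerprint(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(source: dict[str, str], indexed: dict[str, str]) -> tuple[list[str], list[str]]:
    """Compare current source documents with stored fingerprints.

    source:  document ID -> current text (e.g. pages pulled from SharePoint)
    indexed: document ID -> fingerprint recorded at the last sync
    Returns (IDs to re-embed and upsert, IDs to delete from the index).
    """
    to_upsert = [
        doc_id for doc_id, text in source.items()
        if indexed.get(doc_id) != fingerprint(text)
    ]
    to_delete = [doc_id for doc_id in indexed if doc_id not in source]
    return to_upsert, to_delete


indexed = {
    "faq": fingerprint("Old FAQ text"),
    "policy": fingerprint("Policy v1"),
    "promo": fingerprint("Summer sale"),
}
source = {"faq": "New FAQ text", "policy": "Policy v1", "pricing": "Pricing page"}
print(plan_sync(source, indexed))  # (['faq', 'pricing'], ['promo'])
```

Unchanged documents such as `policy` are skipped, which keeps embedding costs proportional to what actually changed.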

Resources for building your own RAG platform

To build a RAG platform, you'll need:

-

A vector database such as Qdrant, Pinecone, or pgvector. There are dozens of proprietary, open-source, and open-core solutions available, so you'll need to carefully assess which one best fits your needs.

-

A framework like LangChain to load and parse your data, chunk it appropriately, and turn it into vectors.

-

A data warehouse like Amazon Redshift, Snowflake, or Apache Doris. Again, there are many proprietary and open-source solutions.

-

A workflow management solution like Apache Airflow to manage your data pipelines and keep your vector database updated.
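Chunking is one of the steps these frameworks handle for you. As an illustration, here is a fixed-size chunker with overlap, measured in words; the sizes are arbitrary assumptions. LangChain's own text splitters offer more options, for example splitting on paragraph and line breaks before falling back to smaller units.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of `chunk_size` words, repeating `overlap` words
    between neighbours so that text cut at a boundary keeps some context."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_words(sample, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```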

To add intents, you'll need to use a conversational platform like Rasa or Microsoft Bot Framework. This will let you architect conversational patterns, to help structure the dialog of your AI solution and keep it focused on solving customer problems.
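To make intents concrete, the toy router below maps a message to an intent and hands it to a dedicated scripted flow, falling back to an open-ended RAG answer otherwise. Keyword matching here stands in for the trained intent classifiers that conversational platforms provide; the intent names, keywords, and flow handlers are hypothetical, and this is not the API of Rasa or Microsoft Bot Framework.

```python
import re
from typing import Callable, Optional

# Hypothetical intents and their trigger keywords.
INTENT_KEYWORDS = {
    "reset_password": {"password", "reset", "locked"},
    "track_order": {"track", "parcel", "delivery", "order"},
}

# Hypothetical scripted flows, one per intent.
FLOWS: dict[str, Callable[[str], str]] = {
    "reset_password": lambda m: "I've sent a reset link to your registered email.",
    "track_order": lambda m: "Please share your order number and I'll look it up.",
}


def classify(message: str) -> Optional[str]:
    """Return the intent whose keywords overlap most with the message, if any."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    best, best_hits = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best, best_hits = intent, hits
    return best


def respond(message: str, rag_answer: Callable[[str], str]) -> str:
    intent = classify(message)
    if intent is not None:
        return FLOWS[intent](message)  # structured, scripted flow
    return rag_answer(message)         # open-ended question: fall back to RAG
```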

Off-the-shelf RAG solutions

Microsoft and Amazon offer Azure AI Search and Amazon Kendra respectively, but in reality, these are much lower-level building blocks than they appear and won't save you much time compared to building your own from scratch. You'll still need to do the heavy lifting when it comes to ingesting your data, setting up connectors, and dealing with edge cases, such as correctly parsing tables in PDF files.

Basic RAG platforms like Azure AI Search offer a straightforward approach to data ingestion, but they fall short when it comes to leveraging metadata to enrich RAG results. If your bot has ingested some information from a large knowledge base, it still needs access to detailed metadata so that it can also provide extra context to the user about where that information originated.

Critical elements such as titles, product information, or other contextual factors play a significant role in refining RAG outputs, and incorporating these elements into a simple RAG system requires bespoke coding solutions and ongoing maintenance responsibilities.
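Here is a small sketch of what carrying metadata through retrieval can look like: each chunk keeps its title, product, and source URL, retrieval can be restricted to one product, and the context passed to the LLM cites where each passage came from. The `Chunk` fields, the example URLs, and the word-overlap scoring function are hypothetical; in practice, the filter would usually run inside the vector database query.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Chunk:
    text: str
    title: str
    product: str
    source_url: str


def search(query: str, chunks: list[Chunk], score: Callable[[str, str], float],
           product: Optional[str] = None, k: int = 3) -> list[Chunk]:
    """Rank chunks by `score`, optionally restricted to one product line."""
    candidates = [c for c in chunks if product is None or c.product == product]
    return sorted(candidates, key=lambda c: score(query, c.text), reverse=True)[:k]


def format_context(results: list[Chunk]) -> str:
    """Prefix each passage with its title and source so the bot can cite it."""
    return "\n".join(f"[{i}] {c.title} ({c.source_url}): {c.text}" for i, c in enumerate(results, 1))


def overlap_score(query: str, text: str) -> float:
    # Crude word-overlap score standing in for vector similarity.
    return len(set(query.lower().split()) & set(text.lower().split()))


chunks = [
    Chunk("Cover starts 30 days after signing.", "Home insurance FAQ", "home", "https://example.com/home-faq"),
    Chunk("Cover starts immediately after signing.", "Car insurance FAQ", "car", "https://example.com/car-faq"),
]
print(format_context(search("when does cover start", chunks, overlap_score, product="car")))
```

Without the product filter, the two nearly identical passages would be hard to tell apart; the metadata is what lets the bot give the right answer and say where it came from.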

On top of that, you'll likely run into complications when it comes to correctly chunking your data, dealing with different file formats and encodings, and deduplication.
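Deduplication is a good example of an edge case that looks trivial. The sketch below drops chunks whose text is identical after normalizing Unicode, case, and whitespace. It does not attempt to catch near-duplicates (for example, the same policy with a different footer), which would need fuzzier techniques such as MinHash.

```python
import hashlib
import unicodedata


def normalized_key(text: str) -> str:
    # NFKC folds compatibility characters such as non-breaking spaces and ligatures.
    text = unicodedata.normalize("NFKC", text)
    text = " ".join(text.lower().split())
    return hashlib.sha1(text.encode("utf-8")).hexdigest()


def deduplicate(chunks: list[str]) -> list[str]:
    """Keep the first occurrence of each chunk, ignoring case and spacing differences."""
    seen: set[str] = set()
    unique = []
    for chunk in chunks:
        key = normalized_key(chunk)
        if key not in seen:
            seen.add(key)
            unique.append(chunk)
    return unique


docs = ["Opening hours: 9am-5pm.", "opening  hours:\u00a09am-5pm.", "Closed on Sundays."]
print(deduplicate(docs))  # ['Opening hours: 9am-5pm.', 'Closed on Sundays.']
```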

Startups in the RAG space

There are startups like Arcee and Deepset that have raised millions of dollars, showing how complicated just this component can get. They provide dedicated solutions in the RAG space, letting you build and customize your own RAG platform with your own custom data.

Once again, it's easy to be fooled by the 'getting started' demos. When it comes to actually using a RAG platform, you'll need to spend thousands of engineering hours in cleaning your data, tweaking configuration, and experimenting with different options like using vector databases, full-text search, or a hybrid approach.
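One widely used way to combine full-text and vector search in a hybrid approach is reciprocal rank fusion (RRF), which merges ranked lists by summing 1 / (k + rank) for each document across lists. The sketch below assumes each retriever has already returned document IDs in ranked order; k = 60 is a commonly used default, not a tuned value.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


keyword_results = ["doc_a", "doc_b", "doc_c"]  # e.g. from full-text (BM25) search
vector_results = ["doc_c", "doc_a", "doc_d"]   # e.g. from a vector database
print(reciprocal_rank_fusion([keyword_results, vector_results]))
# ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

Because RRF only uses ranks, it sidesteps the problem that BM25 scores and cosine similarities live on different scales.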

Galileo, a startup that focuses on observability, has a detailed post outlining how complicated a RAG system can get with components such as query rewriting, ranking, caching, guardrails, and many others, each of which can be individually optimized.

Layer 3: Frontend for enterprise GenAI

If your GenAI solution is customer-facing, you'll need to put some thought into how it looks. In many cases, your solution will have several faces depending on your use case. Maybe your customers can interact with a chatbot on your website and receive AI-generated messages on support platforms like Zendesk. Your employees might also have integrations built into back-office systems to make it easy for them to tag-team with the AI to provide hybrid responses.

Building your own frontend or interface for your GenAI solution depends heavily on your existing software stack, and once again, you have the choice of building from scratch, buying a component, or buying a complete solution.

Building from scratch. You'll need a frontend framework like React or Next.js or the capability to use components and libraries such as FastChat. You'll also need to build a fast backend using tools like Redis and store the chat data in a database such as Postgres.

To get the most out of GenAI interactions, it's critical to customize interfaces for both end users and enterprise agents. For end users, the UI should indicate whether they are interacting with an AI or a human, adapting to specific scenarios to improve understanding and trust. For instance, in customer support settings such as banking, the UI could add visual cues to messages to clarify the nature of the interaction. If a human needs to step in, the UI should inform the end user that they are now interacting with an agent.

On the enterprise side, agents should receive customized UI alerts based on the context of the user they are assisting. For example, if a customer requests a significant transaction, the system could automatically flag any suspicious activity and notify an agent to intervene.
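One way to support both needs is to tag every message with who sent it and let a simple rule raise alerts for agents. In the sketch below, the `Sender` values, the message fields, and the 10,000 threshold are all hypothetical; a real bank would rely on its own risk systems to decide what counts as suspicious.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Sender(Enum):
    AI = "ai"
    HUMAN_AGENT = "human_agent"
    CUSTOMER = "customer"


@dataclass
class Message:
    sender: Sender
    text: str
    transfer_amount: Optional[float] = None  # set when the customer requests a transaction


def display_label(message: Message) -> str:
    """Visual cue shown to the end user next to each message."""
    labels = {Sender.AI: "AI assistant", Sender.HUMAN_AGENT: "Support agent", Sender.CUSTOMER: "You"}
    return labels[message.sender]


LARGE_TRANSFER_THRESHOLD = 10_000  # hypothetical limit


def needs_agent_alert(message: Message) -> bool:
    """Flag customer requests that a human agent should review."""
    return (
        message.sender is Sender.CUSTOMER
        and message.transfer_amount is not None
        and message.transfer_amount >= LARGE_TRANSFER_THRESHOLD
    )
```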

Buying a chatbot component. Proprietary components like Intercom or open-source alternatives like Papercups offer chatbot widgets. If you already use a CRM like Salesforce, it may come with a chatbot widget that you could integrate with.

Layer 4: Enterprise features for GenAI

Many of the open-source or self-hosted building blocks for getting started with building your own GenAI solution do not take into account enterprise-specific needs like privacy, security, data governance, and certifications.

Enterprises need to protect themselves from practical concerns and legal risks when it comes to building GenAI solutions. This applies to:

-

Data privacy: The data fed into the GenAI solution. Normally, you'll need to run a PII (personally identifiable information) anonymization pipeline on any data before it gets ingested.

-

Guardrails: You'll need to carefully think about what you let users say to your AI, and what you let your AI say to your users. Startups such as Guardrails AI, Lakera, Aim Security, and Prompt Security have raised millions of dollars to help companies secure their AI solutions, showing just how challenging this seemingly simple problem can be.

-

AI-generated data: The data generated by the GenAI solution. A small claims court recently forced Air Canada to abide by a promise its chatbot made to a customer, underscoring the importance of grounding in AI, the process of aligning the system's outputs with verifiable facts and the organization's capabilities to minimize the risk of misleading or inaccurate information.

-

Compliance: Various AI-specific and AI-adjacent regulations, policies, and frameworks, such as SOC 2 certification, GDPR compliance, the EU AI Act, the White House's Blueprint for an AI Bill of Rights, and a plethora of others.
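To illustrate the PII step described under data privacy, here is a minimal regex-based anonymizer that replaces email addresses, IBAN-like strings, and phone numbers with placeholder tags before text is ingested. The patterns are simplified assumptions that will miss many cases (names and postal addresses, for instance); production pipelines typically add named-entity recognition, for example via a library such as Microsoft Presidio.

```python
import re

# Simplified, illustrative patterns; order matters (IBANs before phone numbers).
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("IBAN", re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def anonymize(text: str) -> str:
    """Replace matched PII with typed placeholders such as <EMAIL>."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"<{label}>", text)
    return text


print(anonymize("Contact anna.meier@example.com or +41 44 123 45 67, IBAN CH9300762011623852957."))
# Contact <EMAIL> or <PHONE>, IBAN <IBAN>.
```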

Similarly to the previous layers, you have a few options when it comes to enterprise features for your GenAI solution. You can:

Build the required safeguards and get your own certifications. This can take months or years to prepare for, and often involves lengthy feedback cycles while auditors find gaps in your processes that need to be addressed. In addition, your team will need to research and stay up-to-date with the evolving legal requirements specific to AI development, a critical step that intertwines with compliance efforts. You'll also need your team to work cross-functionally across product, engineering, and legal to check every compliance box.

Get security and compliance help from a partner. Many agencies can help you achieve SOC 2 or ISO/IEC 27001:2022 compliance, perform penetration testing on your in-house solution, and consult about privacy and compliance, but none provides a panacea. In the end, you will still need to do the legwork to ensure that you understand the risks associated with GenAI and can guard against them, and to convince regulatory authorities that you have done so.

Besides compliance, the enterprise features of your GenAI solution are critical mechanisms that ensure the system not only functions effectively within its technical and legal boundaries, but also remains adaptable, user-friendly, and transparent.

Among enterprise feature considerations like scalability, flexibility, performance, reliability, and monitoring and analytics, you'll want to pay particular attention to:

-

Prompting and LLM Validation: Devising precise prompts and validating LLM outputs are crucial for tailoring responses and ensuring the relevance and accuracy of the information provided.

-

Audit Trails: Essential for tracking changes, usage, and interactions with the GenAI system, providing transparency and accountability.

-

Retraining workflows: Beyond static LLM applications, integrating retraining processes and workflows ensures that the GenAI solution evolves and adapts over time, staying relevant and efficient.

-

UI for non-technical users: A crucial layer that allows business users to control, train, and improve system performance without deep technical expertise. An emphasis on the no-code development philosophy is essential.
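As an example of validating LLM outputs, suppose the prompt asks the model to reply in JSON with an `answer` and the IDs of the sources it used. The sketch below rejects replies that are not valid JSON, lack the expected fields, or cite sources that were never retrieved. The field names are hypothetical, and what to do with a rejected reply (retry, fall back, or hand over to a human) is a design choice left open here.

```python
import json
from typing import Optional


def validate_reply(raw: str, retrieved_ids: set[str]) -> Optional[dict]:
    """Return the parsed reply if it passes all checks, otherwise None."""
    try:
        reply = json.loads(raw)
    except json.JSONDecodeError:
        return None  # not JSON at all
    if not isinstance(reply, dict):
        return None
    answer, sources = reply.get("answer"), reply.get("sources")
    if not isinstance(answer, str) or not answer.strip():
        return None  # missing or empty answer
    if not isinstance(sources, list) or not sources or not all(isinstance(s, str) for s in sources):
        return None  # ungrounded: no usable source IDs cited
    if not set(sources) <= retrieved_ids:
        return None  # cites a source that was never retrieved
    return reply
```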

Layer 5: Integrations for enterprise GenAI

As your solution scales, you're going to find more and more use cases for it, and this means you should consider from the start whether your solution will readily integrate with different systems.

It's crucial to consider the embedded AI approach, where solutions not only integrate but also offer intelligent assistant capabilities within leading enterprise platforms such as Salesforce, Microsoft Dynamics, Confluence, or Genesys. This transformative feature significantly enhances adoption and streamlines processes.

You can build your own integrations from scratch, use third-party tools like UiPath or Blue Prism, or rely on the integrations already built into a complete solution.

Building your own integrations. You can use data connector tools like Airbyte or lower-level event streaming tools like Apache Kafka to move data between different systems and make it available to your GenAI solution.

Buying an off-the-shelf data automation tool. Platforms like Zapier have mature connections to nearly every platform imaginable, but they are primarily designed for consumer use, not enterprise, and their pay-per-use pricing can lead to budget shocks as you scale. Other options like Workato are more enterprise-ready, but will require time for your team to onboard and learn.

Bonus Layer 6: Deployment, monitoring, and observability

We mentioned five layers, but once you've built your complete solution, you're still not done. You'll need to deploy and monitor your solution.

The world changes and how people interact with your system changes too, so just because it works well in a specific week does not mean that it will continue to work well in the future.

You'll need to constantly measure how your system performs on different metrics, including accuracy and performance. This includes monitoring each component of the system individually, as well as the platform as a whole.

Monitoring GenAI solutions is harder than monitoring traditional software because their outputs are non-deterministic, so you can't simply compare what they produce against an expected result.
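A simple starting point is to track a rolling average of a per-interaction quality signal and alert when it drops below an agreed baseline. In the sketch below, the signal (for example, a thumbs-up rate or an automated evaluation score between 0 and 1), the window size, and the tolerance are all assumptions to tune for your own system.

```python
from collections import deque


class QualityMonitor:
    """Rolling-window monitor for a 0-1 quality score per interaction."""

    def __init__(self, baseline: float, window: int = 500, tolerance: float = 0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores: deque = deque(maxlen=window)  # oldest scores drop out automatically

    def record(self, score: float) -> None:
        self.scores.append(score)

    def rolling_mean(self) -> float:
        return sum(self.scores) / len(self.scores) if self.scores else float("nan")

    def should_alert(self) -> bool:
        """Alert once the window is full and quality has fallen below baseline - tolerance."""
        if len(self.scores) < self.scores.maxlen:
            return False
        return self.rolling_mean() < self.baseline - self.tolerance
```

Waiting for a full window avoids alerting on a handful of early interactions; the trade-off is that a larger window reacts more slowly to a genuine drop.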

Solutions like LangSmith will help you monitor quality over large test suites, Arize and similar tools will let you monitor your models directly, and Galileo will help you scale the creation and evaluation of experiments to improve and maintain your platform.

The bottom line on build vs. buy enterprise GenAI

In the fast-moving and high-stakes race that is GenAI, it's hard to predict which LLM will come out on top, which AI service providers will be responsive to the demands of an evolving market, or how regulatory changes and developing industry standards will impact the AI landscape in the short and long term.

Building a GenAI solution for your business from scratch is only realistic if your business is AI.

The hybrid approach

If you're considering a hybrid strategy for deploying enterprise GenAI solutions, analyze the strengths of both the off-the-shelf and custom components so that your business benefits from the best of both worlds while mitigating potential risks.

-

Identify the specific goals and requirements of your GenAI initiative. Understand where off-the-shelf solutions can meet your needs and where custom development is necessary.

-

Assess the ease of integration between external GenAI services and your existing systems. Seamless integration is crucial for a hybrid strategy to work effectively.

-

Ensure that any external GenAI service complies with your data privacy and security requirements. Understand how data will be handled, stored, and processed by third-party services.

-

Evaluate whether the solutions (both off-the-shelf and custom) can scale according to your business growth and meet your performance benchmarks.

-

Consider the total cost of ownership, including development, maintenance, and subscription fees for off-the-shelf products. A hybrid approach may not be cost-effective in the long run if you fail to factor in unexpected challenges or changes.

-

Ensure you have access to the necessary technical expertise, both for integrating external services and for developing and maintaining custom solutions.

-

Choose reputable service providers with a track record of reliability and robust support. Their ability to support your business needs over time is crucial.

-

Verify that the GenAI solutions, both purchased and developed, comply with relevant regulations and laws, such as GDPR or CCPA, especially when handling sensitive data.

-

Consider how easy it will be to update or replace components of your GenAI solution as new technologies emerge. A flexible architecture can help accommodate future changes without significant overhauls.

-

Reflect on the ethical implications of your GenAI applications, including bias, fairness, and transparency. Ensure your approach aligns with ethical guidelines and societal expectations.

-

Define clear metrics for evaluating the success of your hybrid GenAI strategy. Continuous monitoring and evaluation will help you adjust your approach as needed.
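The total cost of ownership comparison mentioned above is, at its simplest, arithmetic over a planning horizon. The sketch below sums upfront and recurring costs for each option; every figure in the example is a hypothetical placeholder chosen only to show the calculation, not a benchmark for either approach.

```python
def total_cost_of_ownership(upfront: float, annual_recurring: float, years: int) -> float:
    """Upfront build or onboarding cost plus recurring costs over the horizon."""
    return upfront + annual_recurring * years


# Hypothetical placeholder estimates; substitute your own figures.
build = total_cost_of_ownership(upfront=1_500_000, annual_recurring=600_000, years=3)  # team, infrastructure, maintenance
buy = total_cost_of_ownership(upfront=100_000, annual_recurring=250_000, years=3)      # integration, subscription fees
print(f"Build: ${build:,.0f}  Buy: ${buy:,.0f}")
```

A fuller model would also price in time to market and the risk of overruns, which the text above notes are easy to underestimate.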

Buying an enterprise GenAI solution

The best choice for most enterprises is to buy an off-the-shelf solution or partner with an enterprise GenAI service provider so that you can avoid the high upfront costs and operational distractions of building your GenAI solution and remain focused on your business's core competencies and strategic goals.

Some things to consider as you weigh up your options:

-

While it's not feasible to build your own foundational LLM, you don't want to find yourself locked into an inferior model either. Your GenAI partner should offer the flexibility to choose the LLM that best suits your needs and switch models when necessary to maintain a competitive edge.

-

Although it seems like you're spoilt for choice when it comes to buying a RAG platform to integrate into your in-house GenAI solution, the reality is fraught with pitfalls. To fully leverage RAG technology, consider purchasing an integrated GenAI solution with advanced RAG features designed to handle complex data contexts and provide superior RAG outcomes without the need for custom development and maintenance.

-

You might already be using several tools that all offer their own frontend for an AI chat-powered interface. Make sure you understand exactly what each tool can do before settling on one as it's very easy to get locked into your first option, only to discover its limitations down the road.

-

Compliance and security are often afterthoughts, but you must understand the risks and requirements upfront if you don't want to get burned or face long delays in releasing your own solution. If you are working with a GenAI partner, make sure you understand what certifications they have, and whether or not these can be carried over to your firm as part of the partnership.

-

There's no shortage of integration options available, but connecting disparate platforms is always going to be one of the main causes of headaches for your team. APIs change and data connectors are brittle, so companies often underestimate the maintenance costs of building their own integrations. Before closing the deal with a GenAI partner, make sure that you understand upfront exactly what integrations they offer, and any limitations that might apply to these integrations.
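Much of the model flexibility described above comes down to architecture: if application code depends on a small interface rather than on one vendor's SDK, switching models becomes a configuration change. The sketch below shows the pattern with a `typing.Protocol`; the adapter classes are hypothetical stubs, and a real adapter would call the vendor's API inside `complete`.

```python
from typing import Protocol


class ChatModel(Protocol):
    def complete(self, system: str, user: str) -> str: ...


class OpenAIAdapter:
    """Hypothetical stub; a real adapter would call OpenAI's API here."""

    def complete(self, system: str, user: str) -> str:
        return f"[openai] {user}"


class AnthropicAdapter:
    """Hypothetical stub; a real adapter would call Anthropic's API here."""

    def complete(self, system: str, user: str) -> str:
        return f"[anthropic] {user}"


PROVIDERS: dict[str, type] = {"openai": OpenAIAdapter, "anthropic": AnthropicAdapter}


def get_model(name: str) -> ChatModel:
    """Select the provider from configuration rather than hard-coding it."""
    return PROVIDERS[name]()


def answer(question: str, model: ChatModel) -> str:
    # Application code only knows about the ChatModel interface.
    return model.complete("You are a helpful support assistant.", question)


print(answer("Where is my order?", get_model("anthropic")))  # [anthropic] Where is my order?
```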

Key advantages of buying an enterprise GenAI solution

While it may seem that building a GenAI solution in-house is ideal—ensuring a perfect fit with existing processes and addressing your specific needs and opportunities in a way that off-the-shelf solutions might not—it's easy to underestimate the timelines and costs involved in developing this kind of niche technology.

Some compelling arguments for buying an enterprise GenAI solution include:

-

Time to market: Building your own GenAI solution may bring you the flexibility and customization you need, but it can take years to develop a comprehensive platform. Consider that some of the time-consuming aspects of building a GenAI solution include:

-

Research and planning in terms of what type of AI model will best suit your business needs, data requirements, and potential technical and regulatory requirements.

-

Data collection and preparation in high volumes to train the model on—collecting, cleaning, and labeling sufficient data is critical for the quality and relevance of the model's performance and accuracy but is one of the most time-consuming stages of developing GenAI.

-

Testing and validation against unseen datasets to assess a model's performance, accuracy, and bias—an iterative process that requires many rounds of adjustments and retraining.

-

Integration and deployment of a GenAI solution into existing systems and workflows that may not be designed to accommodate AI-driven processes, and setting up the necessary infrastructure like cloud-based services, on-premises servers, and privacy and security measures to protect the data.

-

Compliance and ethical considerations.

-

Partnering with a GenAI platform will have you reaping the benefits of a GenAI solution in a matter of weeks rather than years.

-

Competency: Finding AI engineers is difficult. Even companies that have well-developed in-house software competency are surprised to find that GenAI development has other challenges like big data management, specialized hardware, and challenging latency requirements for inference.

-

Cost: The cost of GenAI extends beyond initial set-up costs to include ongoing expenses such as data pipeline development, process optimization, and technology to keep up with the latest industry developments. External GenAI partners have already made these investments and developed sophisticated pipelines and processes that you can leverage cost-effectively.

-

Risk: It's challenging to fully control and set boundaries for GenAI solutions, and policies around these emerging technologies are constantly changing and vary considerably between regions. It might be tempting to shortcut innovation by opting for "quick-and-dirty" methodologies to implement GenAI in your business, but this often proves to be problematic—more dirty than quick—in the long run, especially as regulatory frameworks and data protection laws like GDPR introduce complex compliance challenges. A GenAI partner will have safeguards in place to protect you from risks you might not have even considered.

-

Flexibility: In-house software solutions often carry a lower risk of vendor lock-in than purchased solutions, but this is not the case for GenAI. A GenAI partner will usually let you switch between LLM providers at the push of a button, whereas building the same flexibility into your own solution is rarely feasible.

Get in touch

Delivering top-notch enterprise solutions, Enterprise Bot is at the forefront of conversation bot technology, helping our customers delight their customers.

Collaboration is at the heart of the Enterprise Bot business and one of our team members would be happy to answer your questions about enterprise GenAI or discuss your business needs.

Enterprise Bot turnkey GenAI solutions for your business feature:

-

A wide variety of foundational LLMs out of the box.

-

A sophisticated, patent-pending RAG solution that makes ingesting your custom data easy.

-

A customizable frontend chat widget, as well as email, voice, and other interfaces.

-

Full SOC 2 and GDPR compliance, powerful anonymization, and security features.

-

Integrations into most platforms.

Get a demo today to find out how we can bring conversational AI to your company.