Prompt Engineering: How to Write the Perfect Prompt (with examples)

Engineering a good LLM prompt is an iterative process, and it often takes repeated refinement to get the desired outcome from the model. While there isn’t a definite formula for prompts that work flawlessly every time, there are some things you can keep in mind to write prompts that are more effective and easier to refine.

In this article, we’ll focus on end-user prompts, for people who use ChatGPT or similar tools to help get their work done.

When engineering prompts for enterprise use cases, such as customer service assistants, you’ll need to take into account additional considerations. You can read more about how we do enterprise prompt engineering in our Enterprise Prompt Engineering article.

Managing context in AI prompts

A general guideline for prompt engineering is to provide the model with context relevant to the query. The model can use the background information to provide more specific and accurate answers. With too little context, the model may not understand the query well enough and tends to return vague or generic answers.

What details to supply varies depending on the query. For instance, when prompting an LLM to generate an overview for a blog post on a tech website, you might supply details like the target audience and the technical level of the article.

When prompting a model to generate a report based on data, you can provide details like the expected formatting and target audience of the report.
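One way to keep context consistent across prompts is to assemble it programmatically. The following is a minimal sketch, not a prescribed method: the `build_prompt` helper and the example context values are hypothetical and only illustrate attaching labeled context to a task.

```python
def build_prompt(task: str, context: dict[str, str]) -> str:
    """Return the task followed by a labeled list of context details."""
    lines = [task.strip()]
    if context:
        lines.append("")
        lines.append("Context:")
        for label, value in context.items():
            lines.append(f"- {label}: {value}")
    return "\n".join(lines)


# Illustrative values only; supply whatever details fit your own query.
prompt = build_prompt(
    "Write an overview for a blog post on a tech website.",
    {
        "Target audience": "software developers",
        "Technical level": "intermediate",
    },
)
print(prompt)
```

Keeping each detail on its own labeled line also makes it easy to remove a detail later if it turns out to distract the model.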

Example of including enough context for prompt engineering

Let’s assume we want to summarize popular art styles from the 19th century and write a comparative article about them. We can use an LLM to learn more about impressionism, for example, by asking:

The model returns a comprehensive response on what impressionism is, but since we’re more interested in a comparative summary, the response is somewhat excessive.

We can refine the results by asking:

The response to this query will break down the key differences between Impressionism and other popular art styles, which is more relevant for our intended use case.

Example of adding too much information to a prompt

Providing sufficient context should improve a model's response to a prompt. However, providing too much information can reduce a model's performance. Too many unimportant details can make a model lose sight of what we want from it and produce an output that doesn’t meet our requirements.

Returning to our example of researching 19th-century art styles, here’s a prompt that contains too much unnecessary information:

This is more information than the model needs to generate a focused report, and is likely to distract the model, leading to a less relevant output.

To strike the right balance between helpful context and distracting detail, consider how much each piece of information helps answer your query, and drop anything that makes the request less clear.

Configuring a system prompt for optimal responses

When interacting with a chat model, you can configure base instructions that the model always follows using a system prompt. A system prompt guides the model’s core behavior in all its interactions with the user. Usually, when you engage with an LLM chat agent like ChatGPT, the system prompt is configured to direct the model to act as a helpful chat assistant.

We can set a custom system prompt to change a model’s core behavior. For example, when querying a model about a specific field, you can set a system prompt telling the model it is an expert in that field. The model will then return information more specific to that role than it would when behaving as a general assistant.
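When calling a chat model from code, the system prompt is commonly passed as the first message in the conversation. The sketch below assumes the role/content message layout used by many chat APIs; exact field names vary by provider, and the `build_messages` helper and the example prompt text are hypothetical. No API call is made here.

```python
def build_messages(system_prompt: str, user_query: str) -> list[dict[str, str]]:
    """Place the system prompt ahead of the user's query."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_query},
    ]


# Example role and query, made up for illustration.
messages = build_messages(
    "You are an expert in 19th-century art history.",
    "What is impressionism?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The resulting list is what you would hand to your provider's chat endpoint; swapping the system prompt changes the model's role without touching the user query.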

Example of giving the assistant a role using the system prompt

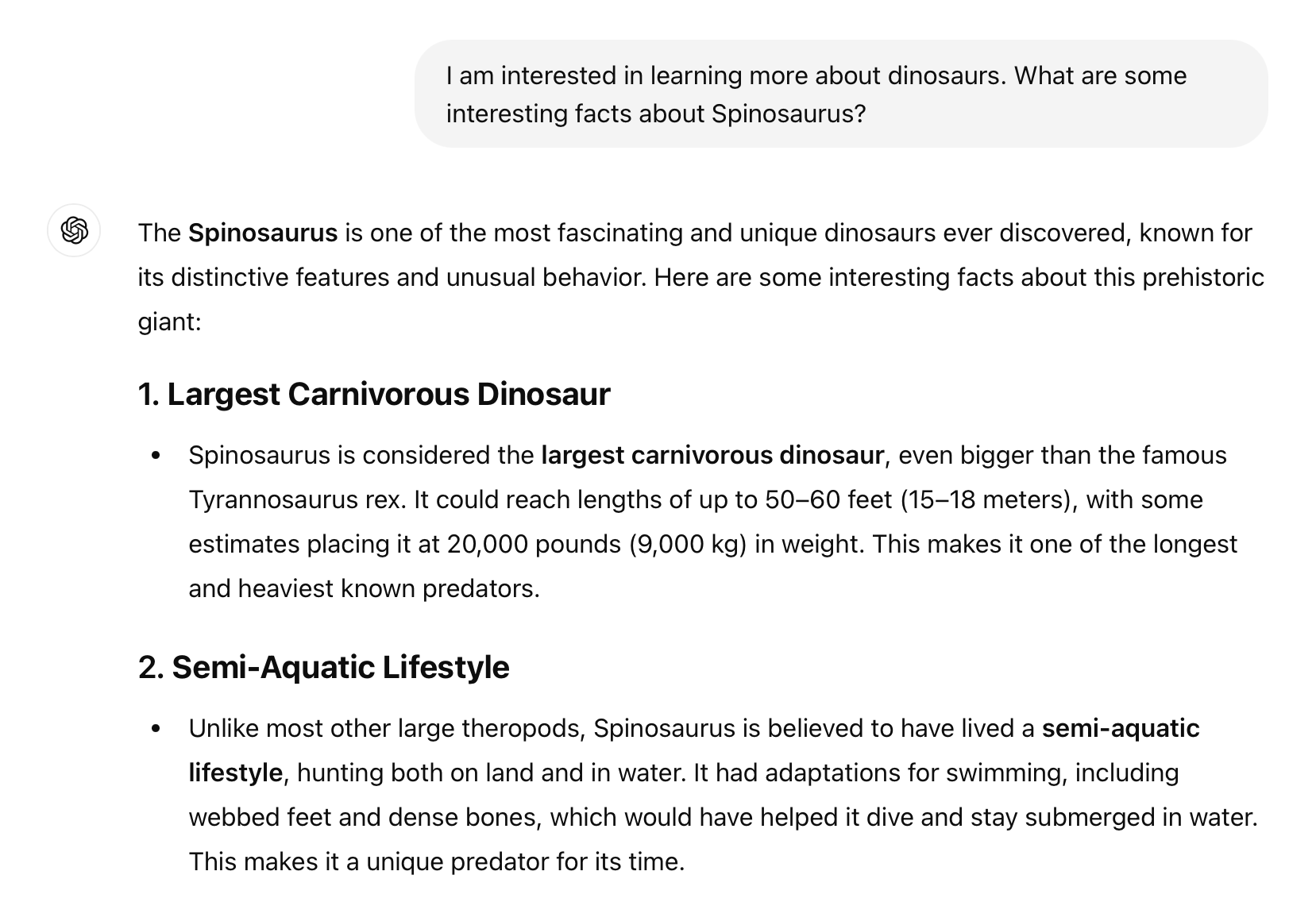

Let's say we're researching dinosaurs and want to know more about the Spinosaurus. First we'll ask ChatGPT (using the default system prompt) to tell us some interesting points about the Spinosaurus.

Now, let’s try configuring a system prompt before asking the model the same question:

System: You are an experienced paleontology researcher specializing in the research of large theropods. Answer any queries with your specialized knowledge of the field and don't make up inaccurate answers.

Here, we can see how specifying a role for the model changes its tone and the amount of detail it presents when answering the same question.

Now let’s compare responses to the query, "What are integrals in mathematics?" from three points of view: a high-school student who has studied only basic mathematics, a university mathematics professor, and a researcher specializing in botany.

Response from the role of a student:

System: You are a high-school student who has studied basic mathematics only. Answer any questions accordingly.

Response from the role of a professor:

System: You are a university professor teaching students advanced mathematics. Answer any questions accordingly.

Response from the role of a botany researcher:

System: You are a researcher specializing in botany and horticulture. You don't have much experience or knowledge about mathematics. Answer any questions accordingly.

When building an enterprise chat agent, the system prompt is where you provide most of the instructions to the chat agent. The system prompt can include information about the company, the role the bot is playing, and instructions on how to handle user queries.

Example system prompt for a medical insurance assistant

Consider a bot for a medical insurance company that answers user queries about their plans and policies. We would want the chat agent to generate responses based on the knowledge base it has been provided, and to supply references to the relevant parts of that knowledge base so users can verify the information. A system prompt detailing these requirements might look something like this:

System: Use this supplied information to answer relevant user queries. When you refer to any of the supplied documents, provide a direct link pointing to the appropriate information. If you don’t have the answer to a query, respond with “Sorry, I cannot help with this query.” and do not generate false information.

Prompt engineering by supplying additional material

When we query an LLM on a topic it's not familiar with, the model is more likely to return inaccurate or vague answers. However, we can supply the model with additional information it can use to augment its knowledge base and improve its responses. When we query the model about that topic, it can draw information from the provided resources instead of depending on its existing knowledge.

Example of prompting to limit information to a specific document

Let’s assume we want to ask a model about a niche research article published recently. The model is unlikely to know this paper, so we can’t expect it to answer accurately on its own. Instead, we can supply the paper together with a system prompt like this:

System: Read through the following research paper and use the context provided to answer any user queries. If you don’t know the answer, say “Sorry, I cannot help with this.” and do not generate false information.

We can also use this augmentation technique to customize a chat model for roles such as customer support or help desk positions. In such cases, we can direct the model to pick answers solely from a supplied knowledge base.
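As a rough sketch of how supplied material can be attached to such instructions, the hypothetical helper below places the document text inside the system prompt. The `<paper>` delimiter and the function name are assumptions made for this example, and the paper text is a placeholder.

```python
FALLBACK_REPLY = "Sorry, I cannot help with this."


def grounded_system_prompt(paper_text: str) -> str:
    """Combine the grounding instructions with the paper's full text."""
    instructions = (
        "Read through the following research paper and use the context "
        "provided to answer any user queries. If you don't know the answer, "
        f'say "{FALLBACK_REPLY}" and do not generate false information.'
    )
    # The <paper> tags are an arbitrary delimiter chosen for this sketch.
    return f"{instructions}\n\n<paper>\n{paper_text.strip()}\n</paper>"


# Placeholder text standing in for the real paper.
system_prompt = grounded_system_prompt("Abstract: placeholder text for the paper.")
print(system_prompt)
```

Keeping the fallback reply in a constant makes it straightforward to detect that reply later, for example to route unanswered questions to a human.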

Example AI prompt for summarizing a legal policy document

Suppose we have a PDF document of insurance terms and policies and we want to use a model to summarize the policy information and answer questions based on it. We might prompt the model like this:

System: [provide link to .pdf document]

Read all of the provided material and use it to answer user queries. Do not generate information that is not present in the provided material. Do not use outside sources to get any information that is not present in the provided material.

The model will now respond to the query using the information in the supplied PDF document.

In an enterprise scenario, the knowledge base a company draws on to answer user queries might be sizable, and supplying a model with every document on every query isn't feasible. At Enterprise Bot, we built an independent system that receives the user query, searches the knowledge base, and returns the relevant information, which we then supply to the model along with the user query to generate a response.

Example of adding supplementary information to a prompt

User:

Custom backend system:

The assistant will then use the provided information to reply to the user’s query.
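To make the search-then-supply idea concrete, here is a deliberately naive sketch. It is not the system described above: it ranks passages by simple word overlap with the query, whereas production systems typically use far more capable search. The function names and the knowledge base entries are invented for illustration.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k passages sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(p)), p) for p in passages]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in relevant[:top_k]]


def build_augmented_prompt(query: str, passages: list[str]) -> str:
    """Prepend the retrieved passages to the user's query."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Relevant information:\n{context}\n\nUser query: {query}"


# Made-up knowledge base entries, for illustration only.
knowledge_base = [
    "Dental cleanings are covered twice per calendar year.",
    "Claims must be submitted within 90 days of treatment.",
    "The office is closed on public holidays.",
]
query = "How many dental cleanings are covered?"
print(build_augmented_prompt(query, retrieve(query, knowledge_base)))
```

Only the passages that match the query reach the model, which keeps the prompt short regardless of how large the knowledge base grows.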

Breaking AI prompts into smaller steps

LLMs tend to get overwhelmed when presented with lots of information or when working on very complex tasks, and may mix things up or generate inaccurate responses. When making complicated queries to a model, it’s better to break the task up into smaller, more manageable pieces, and progress through them in steps.

Example of breaking up a prompt into smaller steps

Imagine we want a model to create an intelligent app to answer customer helpdesk queries using a knowledge base:

As we might expect, the model struggles to handle such a complicated, multi-faceted task. It may return the steps needed to create such an app rather than the specific solution we're looking for.

A better way to proceed with this task would be to break down the task into manageable chunks that the model can process with ease:

This example oversimplifies the process of creating such an app, but it demonstrates how to break down a complicated task into manageable steps.

You can also break a complex task down into a clear, organized list of instructions and provide the list as a single prompt to reduce the chances of the model mixing up tasks. This approach is more likely to produce focused and clear responses.
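Stepping through a task can also be automated. The sketch below sends each step as its own prompt and passes the previous answer forward. `run_steps` and `fake_model` are hypothetical names, `fake_model` is a stand-in that only echoes its input so the example runs without any API, and the step texts are invented for illustration.

```python
from typing import Callable


def run_steps(steps: list[str], ask_model: Callable[[str], str]) -> list[str]:
    """Send each step as its own prompt, passing along the previous answer."""
    answers: list[str] = []
    previous = ""
    for number, step in enumerate(steps, start=1):
        prompt = f"Step {number}: {step}"
        if previous:
            prompt += f"\n\nResult of the previous step:\n{previous}"
        previous = ask_model(prompt)
        answers.append(previous)
    return answers


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call; it echoes the first line of the prompt.
    return f"[answer to: {prompt.splitlines()[0]}]"


# Hypothetical breakdown of the helpdesk app task.
steps = [
    "List the components a helpdesk question-answering app needs.",
    "Design the search over the knowledge base.",
    "Write the prompt that combines search results with the user query.",
]
for answer in run_steps(steps, fake_model):
    print(answer)
```

Replacing `fake_model` with a function that calls your model of choice keeps the rest of the loop unchanged.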

Using presentation in prompt engineering

How a prompt is formatted and presented to a model is an important and often overlooked part of effective prompting.

Ensuring information is laid out clearly and understandably in a prompt prevents a model from misinterpreting your query and helps it zero in on the most important aspects for a more concise and targeted output.

Prompt presentation includes using formatting methods like Markdown or specifying delimiters of your own to clearly label the different parts of the prompt.
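As an illustration of labeling the parts of a prompt, the following sketch puts numbered instructions under one Markdown heading and the source text under another, fenced by triple quotes. The `format_prompt` helper, the heading names, and the choice of delimiter are assumptions for this example rather than a required layout.

```python
def format_prompt(instructions: list[str], article: str) -> str:
    """Lay out numbered instructions and a delimited article."""
    numbered = "\n".join(
        f"{number}. {text}" for number, text in enumerate(instructions, start=1)
    )
    return (
        "## Instructions\n"
        f"{numbered}\n\n"
        "## Article\n"
        '"""\n'
        f"{article.strip()}\n"
        '"""'
    )


# Placeholder instructions and article text.
print(
    format_prompt(
        ["Summarize the key points.", "Include background only if it is needed."],
        "Article text goes here.",
    )
)
```

Separating the instructions from the material they apply to reduces the chance that the model treats part of the article as an instruction.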

Example of clear and specific AI prompting

In this example, we provide a model with an article on impressionism in art and ask it to summarize only certain parts of it:

This prompt is confusing and vague, and as a result, the model generates a summary including only some key points. Additionally, the generated response includes a summary of the background with the title “Background (only if necessary)” instead of determining if it’s useful information or not.

Rewriting the prompt to provide the model with a clear set of instructions that are easy to follow will deliver better results:

The model returns a more cohesive summary in response to this prompt, covering the key points and including the relevant background information.

Even minor, often-overlooked changes in wording can significantly influence a model's behavior and responses. Details that may appear superficial, like the phrasing of a sentence, can lead to completely different results from an LLM.

Example of defining the desired response style in an AI prompt

Let's explore how the phrasing of a prompt might affect a model's response.

First, we ask a model to generate an argument about the superiority of impressionism over realism in art:

The model returns the potential pros and cons of each style as an ordered list.

We can modify the prompt to specify the format of the output:

The model now presents a debate in dialogue over which art style is superior, as well as whether there is any superiority in something as subjective as art styles.

Being mindful of the way you word your queries and making small, careful adjustments to tune your prompts will help get the desired results more efficiently.

Providing examples of expected behavior in prompts

You can direct a model toward an expected behavior by including examples in your prompt. For instance, providing the model with demo user queries and examples of "good" and "bad" responses to them can guide the model to emulate desired behaviors.

Example of providing examples as part of a prompt

Suppose we want a model to generate simple, one-line descriptions of products in an online store. We could ask the model:

The model returns a lengthy paragraph marketing the product, but this is not what we want.

We can provide some examples of the type of description we want:

This time, the response is a simpler description, just as we requested.

Guiding a model's behavior with examples is particularly useful when you want the model to follow certain formatting conventions or closely match the style of existing work. While you could obtain similar results by extensively describing the output you want, it’s simpler and more precise to provide examples of what you need.
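In code, examples are often supplied as earlier turns of the conversation, so the model sees each sample input paired with the desired output before the real request. This is a minimal sketch assuming the common role/content message layout; the `few_shot_messages` helper and the product descriptions are invented for illustration.

```python
def few_shot_messages(
    instruction: str,
    examples: list[tuple[str, str]],
    new_input: str,
) -> list[dict[str, str]]:
    """Build a conversation that shows example pairs before the real input."""
    messages = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": new_input})
    return messages


# Made-up products and descriptions.
messages = few_shot_messages(
    "Write a one-line description of the product.",
    [
        ("Steel water bottle", "A durable steel bottle that keeps drinks cold."),
        ("Canvas tote bag", "A roomy canvas tote for everyday errands."),
    ],
    "Wireless desk lamp",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Two or three examples are often enough to convey length and tone; the model then completes the final user turn in the same style.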

Prompt engineering for LLMs is often a case of trial and error, and even following best practices doesn't guarantee perfect results. Iterating on prompts using LLM responses as feedback is key to refining the process. Still, applying effective techniques when crafting and iterating on prompts will improve your workflow efficiency and lead to LLM outputs that are accurate, relevant, and aligned with your expectations.

Are you looking for a conversational GenAI solution with the guesswork removed? Book your demo with us today to see how we can help you wow customers, boost sales and maximize agent efficiency with a personal concierge to help resolve your customers' queries and drive your business.