Revolutionizing Chatbot Technology: How Enterprise Bot Leverages RAG For GenAI Applications

Large Language Models (LLMs) such as OpenAI's ChatGPT and Anthropic's Claude have shown considerable potential in business applications, but they come with inherent limitations: training data that is frozen at a cutoff date, no knowledge of an organization's domain-specific data, and opaque, black-box behavior. Retrieval Augmented Generation (RAG) has emerged as a practical way to address these challenges.

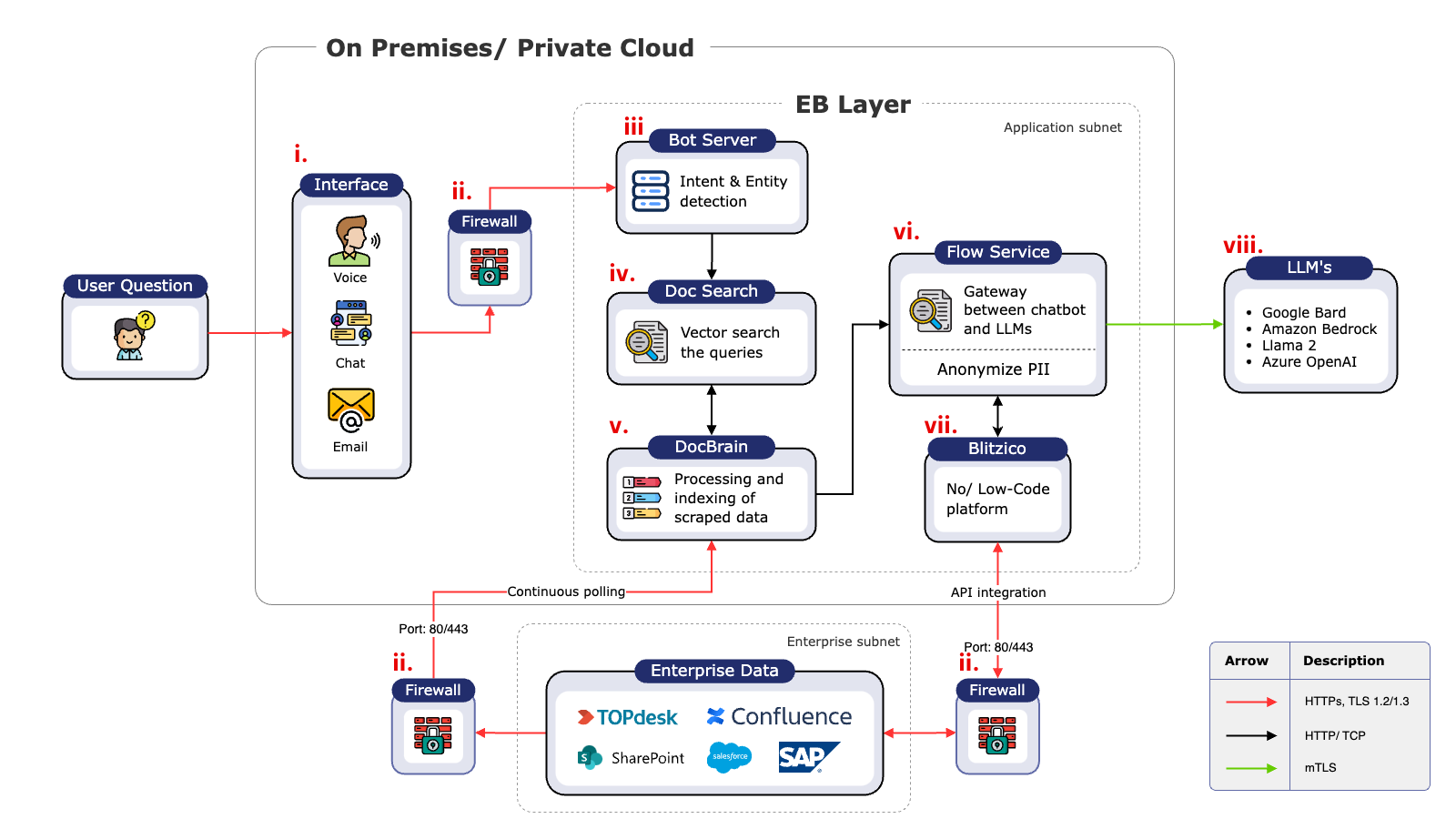

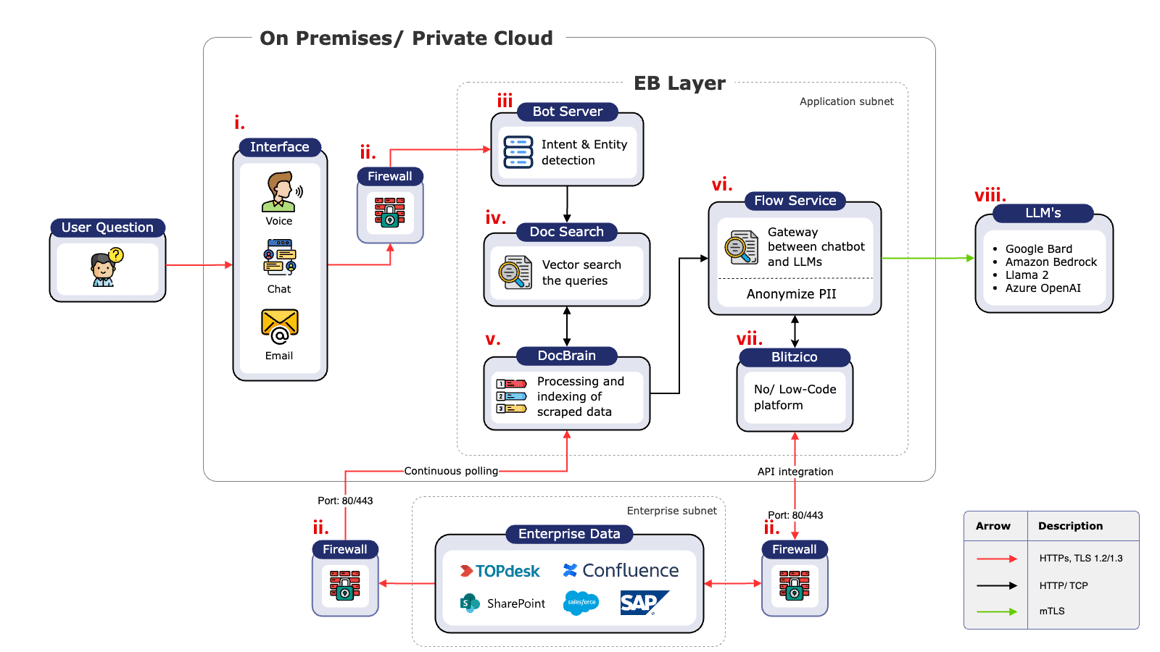

Enterprise Bot's architecture uses RAG to extend the capabilities of LLMs, retrieving data efficiently from enterprise sources such as Confluence and SharePoint and using it to generate responses. This document describes the architecture, the data flow, and the benefits of applying RAG through the Enterprise Bot solution to GenAI applications.

RAG delivers context and domain-specific data to your GenAI business application

Enterprise Bot is a leading omni-channel conversational AI solution that provides personalized virtual assistants. It combines GenAI with process automation, can be powered by any Large Language Model such as ChatGPT, and is designed to improve both customer and employee experience.

The architecture of Enterprise Bot is meticulously designed to optimize RAG processes, thereby bridging the gap between static LLMs and dynamic, context-aware response generation.

Enterprise Bot's Architecture Overview

Component Description:

i. Interface: The initial point of interaction where users input their queries, be it via chat, email, documents, or voice calls.

ii. Firewall: Ensures authorized traffic, protecting the system from unauthorized access and potential security threats.

iii. Bot Server: Analyzes user queries for intent and entity recognition, determining the subsequent workflow.

iv. DocSearch: A hybrid and semantic search engine that transforms user queries into search vectors and fetches relevant data from DocBrain.

v. DocBrain: Ingests and updates data from various sources, ensuring the content remains fresh and accurate.

vi. Flow Service: Acts as the RAG pipeline and the gateway to the LLMs, providing a seamless flow of information.

vii. Blitzico: A no/low code platform for augmenting information or executing tasks via APIs into various systems or RPAs as needed.

viii. LLM: Large Language Models such as ChatGPT, models served through Azure OpenAI, and Llama 2, which generate responses based on the provided prompts.

ix. Training/Validation: Stores all data for analysis, correction, and model fine-tuning to improve the system over time.
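
To make the order of these components concrete, here is a minimal Python sketch of a single request passing through them. Every function name, the token allow-list, the keyword-overlap ranking, and the stubbed LLM call are illustrative assumptions rather than Enterprise Bot's actual API; the sketch only mirrors the sequence of components listed above.

```python
from typing import Dict, List

# Hypothetical in-memory stand-in for content that DocBrain has ingested.
DOCBRAIN: Dict[str, str] = {
    "confluence/vacation-policy": "Employees receive 25 vacation days per year.",
    "sharepoint/expenses": "Expense reports are due by the 5th of each month.",
}

ALLOWED_TOKENS = {"demo-token"}  # assumption: a simple token allow-list


def firewall(token: str) -> None:
    """Reject traffic that does not carry an authorized token."""
    if token not in ALLOWED_TOKENS:
        raise PermissionError("unauthorized request")


def bot_server(query: str) -> str:
    """Toy intent detection: greetings skip retrieval, everything else uses it."""
    greetings = {"hi", "hello", "thanks"}
    return "smalltalk" if query.lower().strip(" !?.") in greetings else "knowledge"


def doc_search(query: str, top_k: int = 1) -> List[str]:
    """Rank stored passages by shared words (a keyword stand-in for real search)."""
    words = set(query.lower().split())
    ranked = sorted(
        DOCBRAIN.items(),
        key=lambda item: len(words & set(item[1].lower().split())),
        reverse=True,
    )
    return [source for source, _ in ranked[:top_k]]


def call_llm(prompt: str) -> str:
    """Stub for the LLM; a real deployment would call a model here."""
    return f"[LLM answer based on a prompt of {len(prompt)} characters]"


def flow_service(query: str, sources: List[str]) -> str:
    """Build the augmented prompt from retrieved passages and pass it to the LLM."""
    context = "\n".join(DOCBRAIN[source] for source in sources)
    return call_llm(f"Context:\n{context}\n\nQuestion: {query}")


def handle_request(query: str, token: str) -> dict:
    """Interface entry point: run a query through the components in order."""
    firewall(token)
    if bot_server(query) == "smalltalk":
        return {"answer": "Hello! How can I help?", "sources": []}
    sources = doc_search(query)
    return {"answer": flow_service(query, sources), "sources": sources}
```

Calling `handle_request("How many vacation days do employees get?", "demo-token")` routes the query through retrieval and returns the stubbed answer together with the source it drew on, while a greeting such as `"Hi!"` is answered without touching the document store.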

Enterprise Bot leverages the RAG advantage to ensure context-awareness, accuracy and auditability

Enterprise Bot uses RAG in its architecture to address the inherent limitations of LLMs by fetching up-to-date or context-specific data from enterprise data sources and making it available to LLMs during response generation.

Advantages include:

a. Up-to-date information: RAG gives LLMs access to the most recent data by interfacing with vector databases containing domain-specific proprietary data.

b. Domain-specific context: Provides LLMs with the much-needed domain-specific information, improving the accuracy and relevance of generated responses.

c. Reduced hallucinations: RAG reduces the incorrect or fabricated information that LLMs generate when they lack context or rely on outdated data.

d. Auditability: RAG enables GenAI applications to cite sources, making the process transparent and easier to audit.
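
The auditability point can be illustrated with a short Python sketch: retrieved passages are numbered in the prompt so that the model can cite them, and the citations in the answer are mapped back to their sources. The `Passage` type, the prompt wording, and the `[n]` citation convention are assumptions made for illustration, not a description of how Enterprise Bot formats its prompts.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Passage:
    source: str  # hypothetical identifier, e.g. a page URL or document path
    text: str


def build_prompt(question: str, passages: List[Passage]) -> str:
    """Number each retrieved passage so the model can cite it as [n]."""
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer using only the numbered context below. "
        "Cite the passages you used as [n]. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def cited_sources(answer: str, passages: List[Passage]) -> List[str]:
    """Map [n] markers in a model answer back to source identifiers."""
    sources: List[str] = []
    for match in re.findall(r"\[(\d+)\]", answer):
        index = int(match) - 1
        # Ignore citation numbers that do not correspond to a retrieved passage.
        if 0 <= index < len(passages) and passages[index].source not in sources:
            sources.append(passages[index].source)
    return sources
```

Because every citation resolves to a stored source identifier, an auditor can check each claim in an answer against the passage it came from, and citations that point to no retrieved passage are simply dropped.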

RAG is more cost-effective than other approaches

The RAG-driven Enterprise Bot solution presents a cost-effective approach compared to alternatives like creating a new foundation model or fine-tuning existing models. It utilizes semantic search to retrieve relevant context, reducing the need for extensive data labeling, constant quality monitoring, and repeated fine-tuning.

Furthermore, Enterprise Bot's patent-pending technology, DocBrain, revolutionizes the current intent-based approach of the conversational AI industry. Traditional platforms require extensive training with over 200 intents and at least 50 examples, often coupled with the recreation of already existing website FAQs. DocBrain overcomes this by autonomously creating knowledge graphs and omni-channel bots from information sources such as websites, PDFs, Confluence, TopDesk, and other repositories. This means that responses are processed without manual intent creation, reducing the time to market by 80%. Additionally, the system updates itself based on website or document changes, minimizing maintenance effort and eliminating the need for CMS re-creation.
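
The article does not describe how DocBrain detects changes, so the following Python sketch shows only one common way such self-updating could work: fingerprint each document's content and re-ingest only what is new or changed. The function names and the hash-based approach are assumptions, not DocBrain's actual mechanism.

```python
import hashlib
from typing import Dict, List


def fingerprint(text: str) -> str:
    """Return a stable SHA-256 digest of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def changed_documents(current: Dict[str, str], index: Dict[str, str]) -> List[str]:
    """Return ids of documents that are new or whose content differs from the index."""
    return sorted(
        doc_id
        for doc_id, text in current.items()
        if index.get(doc_id) != fingerprint(text)
    )


def sync(current: Dict[str, str], index: Dict[str, str]) -> List[str]:
    """Update the fingerprint index in place and return the ids that were re-ingested."""
    to_update = changed_documents(current, index)
    for doc_id in to_update:
        # A real pipeline would re-chunk and re-embed the document at this point.
        index[doc_id] = fingerprint(current[doc_id])
    for doc_id in set(index) - set(current):
        del index[doc_id]  # the document was removed at the source
    return to_update
```

Running `sync` on a schedule against a crawl of the source content means unchanged pages cost only a hash comparison, which is one way to keep maintenance effort low as sources evolve.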

Unlocking Conversational AI interfaces: How Vector Databases Power Semantic Searches with Natural Language Queries

By employing semantic search and vector databases, Enterprise Bot facilitates a deeper understanding of user queries, enabling more accurate and contextually relevant responses. This process involves converting domain-specific data into vectors using an embedding model, storing these vectors in a database like Pinecone, and performing semantic searches to retrieve the most pertinent data.
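
The embed, store, and search steps can be sketched in a few lines of Python. This is a toy under stated assumptions: `embed` uses normalized word counts as a stand-in for a real embedding model (which would capture meaning rather than word overlap), and `InMemoryVectorStore` is a hypothetical stand-in for a vector database like Pinecone, not its client API.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]  # sparse vector: word -> weight


def embed(text: str) -> Vector:
    """Toy embedding: L2-normalized word counts (stand-in for a real model)."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()} if norm else {}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity of two already-normalized sparse vectors."""
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


class InMemoryVectorStore:
    """Hypothetical minimal vector store kept entirely in memory."""

    def __init__(self) -> None:
        self._rows: List[Tuple[str, Vector]] = []

    def upsert(self, doc_id: str, text: str) -> None:
        """Insert a document's vector, replacing any earlier version of it."""
        self._rows = [(i, v) for i, v in self._rows if i != doc_id]
        self._rows.append((doc_id, embed(text)))

    def query(self, text: str, top_k: int = 2) -> List[Tuple[str, float]]:
        """Return the top_k stored documents most similar to the query text."""
        query_vector = embed(text)
        scored = [(doc_id, cosine(query_vector, vec)) for doc_id, vec in self._rows]
        return sorted(scored, key=lambda row: row[1], reverse=True)[:top_k]


store = InMemoryVectorStore()
store.upsert("vpn", "how to reset your vpn password")
store.upsert("leave", "how to request parental leave")
best_match = store.query("reset vpn password", top_k=1)[0][0]
```

Here `best_match` is the `vpn` document, since it shares the most weight with the query. Swapping `embed` for a real embedding model is what turns this word-overlap ranking into semantic search, where a query can match a passage that uses different wording.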

Revolutionizing GenAI: Enterprise Bot's RAG-Driven Framework Overcomes LLM Limitations for Enterprises

Enterprise Bot, through its RAG-driven architecture, provides a robust solution to the limitations of current LLMs, making GenAI applications more accurate, efficient, and cost-effective. By continually updating its database and providing domain-specific context to LLMs, it significantly enhances the performance and reliability of GenAI applications in a business setting.

Stepping into the future of Customer and Employee experience with Enterprise Bot's omnichannel, intentless, LLM-agnostic solution

Discover how our advanced Conversational AI and process automations, including the trailblazing DocBrain technology, can elevate your customer interactions, streamline service processes, and unlock unprecedented levels of customer satisfaction. It's time to bring the customer service experience into the future — your future. Book a demo now. Your journey toward AI-enhanced customer engagement starts here, with Enterprise Bot. Don't wait — seize the AI advantage today!